Note: This guide does not cover installing Element Call in standalone mode, which is not needed for making calls within clients. It takes inspiration from the excellent self-hosting guide provided by the Element Call team.

Why deploy your own Element Call?

If you’ve recently updated ElementX (a popular Matrix client), you might have noticed that video calls no longer work out of the box (as of April 2025). That’s because ElementX no longer uses Element’s hosted LiveKit backend, which was only ever offered as a courtesy service. To make calls, you now need to set up your own Element Call backend (also known as the MatrixRTC backend).

I’ve just gone through this process with my own Matrix homeserver, and while it’s not terribly difficult, there are several moving parts to configure. This guide documents what I learned so you can get up and running with minimal fuss.

What we’ll be setting up

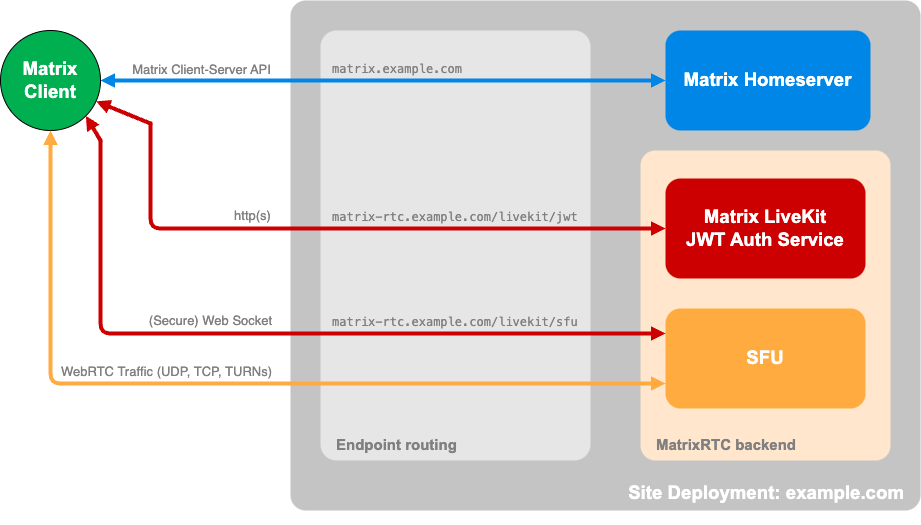

We’ll be deploying:

- LiveKit - a WebRTC Selective Forwarding Unit (SFU) that handles the actual media streaming

- A JWT authentication service - to securely connect Matrix users to LiveKit

- Necessary reverse proxy configuration

- DNS settings

- Homeserver configuration changes

Prerequisites

This guide presumes that:

- You use a Matrix homeserver (in this case, Synapse)

- You want to deploy with Docker Compose

- There is a reverse proxy in front of your homeserver (I provide Apache, Nginx and Caddy example configs)

- You have access to modify your DNS records

- And finally, that all of the above makes sense so far

Create the Docker Compose configuration

First, create a new directory for your Element Call setup and create a docker-compose.yml file:

    services:
      auth-service:
        image: ghcr.io/element-hq/lk-jwt-service:latest
        container_name: element-call-jwt
        hostname: auth-server
        environment:
          - LIVEKIT_JWT_PORT=8080
          - LIVEKIT_URL=https://matrixrtc.yourdomain.com/livekit/sfu #Change
          - LIVEKIT_KEY=devkey
          - LIVEKIT_SECRET=yoursecretkey #Change
          - LIVEKIT_FULL_ACCESS_HOMESERVERS=yourdomain.com #Notes on this below
        restart: unless-stopped
        ports:
          - 8070:8080 #Change 8070 to whichever port you want JWT to be available on locally

      livekit:
        image: livekit/livekit-server:latest
        container_name: element-call-livekit
        command: --config /etc/livekit.yaml
        ports:
          - 7880:7880/tcp
          - 7881:7881/tcp
          - 50100-50200:50100-50200/udp
        restart: unless-stopped
        volumes:
          - ./config.yaml:/etc/livekit.yaml:ro

Be sure to replace yourdomain.com with your actual domain, and set a proper secret key instead of ‘yoursecretkey’ (a long string of letters and numbers will do).
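If you'd rather not invent a secret by hand, here is a minimal Python sketch that generates one. The function name generate_livekit_secret and the 32-byte length are my own choices for illustration, not something LiveKit requires.

```python
import secrets


def generate_livekit_secret(num_bytes: int = 32) -> str:
    """Return a random hex string to paste in as LIVEKIT_SECRET.

    The name and the default length are illustrative assumptions.
    """
    # token_hex returns two hexadecimal characters per random byte.
    return secrets.token_hex(num_bytes)


if __name__ == "__main__":
    print(generate_livekit_secret())
```

Paste the printed value in place of yoursecretkey, both in the Docker Compose file and in the LiveKit config.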

LIVEKIT_FULL_ACCESS_HOMESERVERS restricts who can create rooms through the JWT service to users from the listed homeservers. Users on other homeservers can still join an existing call over federation, but they cannot create one. For this to work you must keep the auto_create: false option as shown in the LiveKit config below.

Finally, don’t forget to forward ports 7881 TCP and 50100-50200 UDP on your router/firewall to the machine running LiveKit.

Create the LiveKit configuration

Create a file named config.yaml in the same directory:

    port: 7880
    bind_addresses:
      - "0.0.0.0"
    rtc:
      tcp_port: 7881
      port_range_start: 50100
      port_range_end: 50200
      use_external_ip: false
    room:
      auto_create: false
    logging:
      level: info
    turn:
      enabled: false
      domain: localhost
      cert_file: ""
      key_file: ""
      tls_port: 5349
      udp_port: 443
      external_tls: true
    keys:
      devkey: "yoursecretkey" #Change

Important: Make sure the secret key matches what you specified in the Docker Compose file.
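A mismatch between the two files is easy to miss, so here is a rough Python sketch that compares them. It assumes the files look like the examples in this guide and uses naive line-based parsing rather than a real YAML parser; the function names are hypothetical.

```python
import re


def compose_credentials(compose_text: str) -> tuple[str, str]:
    """Extract LIVEKIT_KEY and LIVEKIT_SECRET from docker-compose.yml text."""
    values = {}
    for name in ("LIVEKIT_KEY", "LIVEKIT_SECRET"):
        # Match "- NAME=value"; \S+ stops before any trailing " #comment".
        match = re.search(rf"^\s*-\s*{name}=(\S+)", compose_text, re.MULTILINE)
        if match is None:
            raise ValueError(f"{name} not found in compose file")
        values[name] = match.group(1)
    return values["LIVEKIT_KEY"], values["LIVEKIT_SECRET"]


def livekit_keys(config_text: str) -> dict[str, str]:
    """Extract the entries under the top-level 'keys:' section of config.yaml."""
    keys = {}
    in_keys = False
    for line in config_text.splitlines():
        if not line.strip() or line.lstrip().startswith("#"):
            continue
        if not line[0].isspace():
            # A new top-level section starts; remember whether it is 'keys:'.
            in_keys = line.split("#")[0].strip() == "keys:"
            continue
        if in_keys:
            # Naive: assumes the secret itself contains no '#' or ':'.
            name, _, value = line.split("#")[0].partition(":")
            keys[name.strip()] = value.strip().strip("\"'")
    return keys


def credentials_match(compose_text: str, config_text: str) -> bool:
    """Return True if the compose key/secret pair appears in the LiveKit keys."""
    key, secret = compose_credentials(compose_text)
    return livekit_keys(config_text).get(key) == secret
```

To use it, read docker-compose.yml and config.yaml into strings (for example with pathlib.Path(...).read_text()) and pass both to credentials_match.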

You can change the rtc UDP port range in the config above (along with the corresponding entries in the Docker Compose file and your router/firewall port forwarding rules) to any range between 50000 and 60000. LiveKit advertises these ports as WebRTC host candidates, and each participant in a room uses two ports.
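To get a feel for how large a range you need, here is a small back-of-the-envelope sketch in Python. It assumes the port range is inclusive at both ends and takes the two-ports-per-participant figure above at face value; max_participants is a hypothetical helper, and real-world capacity depends on more than port count.

```python
def max_participants(port_range_start: int, port_range_end: int,
                     ports_per_participant: int = 2) -> int:
    """Rough upper bound on concurrent participants for a UDP port range."""
    if port_range_end < port_range_start:
        raise ValueError("port_range_end must not be below port_range_start")
    # Assumption: both ends of the range are usable, so the count is inclusive.
    total_ports = port_range_end - port_range_start + 1
    return total_ports // ports_per_participant


if __name__ == "__main__":
    # The range used in this guide: 101 ports, two per participant.
    print(max_participants(50100, 50200))  # prints 50
```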

Configure your reverse proxy

You’ll need to set up a reverse proxy to handle the WebSocket connections and HTTP requests. Here is an example configuration for Apache:

    RequestHeader setifempty X-Forwarded-Proto https
    ProxyTimeout 120

    <Location "/sfu/get">
        ProxyPreserveHost on
        ProxyAddHeaders on
        ProxyPass "http://127.0.0.1:8070/sfu/get"
        ProxyPassReverse "http://127.0.0.1:8070/sfu/get"
    </Location>

    <Location "/livekit/sfu">
        ProxyPreserveHost on
        ProxyAddHeaders on
        # WebSocket-specific configuration
        ProxyPass ws://127.0.0.1:7880 upgrade=websocket flushpackets=on
        ProxyPassReverse ws://127.0.0.1:7880
    </Location>

And an example configuration for nginx:

    location /sfu/get {
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_pass http://127.0.0.1:8070/sfu/get;
    }

    location /livekit/sfu/ {
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
        proxy_buffering off;
        proxy_pass http://127.0.0.1:7880/;
    }

And Caddy:

    matrixrtc.yourdomain.com {
        handle /sfu/get* {
            reverse_proxy 127.0.0.1:8070
        }

        handle_path /livekit/sfu* {
            reverse_proxy 127.0.0.1:7880
        }
    }

Remember to adjust the local IP addresses if your services aren’t running on the same machine as your reverse proxy, and the ports if you’ve changed those from the example docker compose configuration.

Configure DNS

Create a new DNS A record that points to your server. Here I use a new subdomain:

    matrixrtc.yourdomain.com A YOUR-SERVER-IP

Update your Synapse configuration

Edit your homeserver.yaml to enable the required MSCs for Element Call:

    experimental_features:
      # MSC3266: Room summary API. Used for knocking over federation
      msc3266_enabled: true
      # MSC4222: needed for syncv2 state_after. This allows clients to
      # correctly track the state of the room.
      msc4222_enabled: true
      # MSC4140: Delayed events are required for proper call participation
      # signalling. If disabled, you are very likely to end up with stuck
      # calls in Matrix rooms.
      msc4140_enabled: true

    # The maximum allowed duration by which sent events can be delayed, as
    # per MSC4140.
    max_event_delay_duration: 24h

    rc_message:
      # This needs to match at least e2ee key sharing frequency plus a bit of headroom
      # Note key sharing events are bursty
      per_second: 0.5
      burst_count: 30

    # This needs to match at least the heart-beat frequency plus a bit of headroom
    # Currently the heart-beat is every 5 seconds, which translates into a rate of 0.2 per second
    rc_delayed_event_mgmt:
      per_second: 1
      burst_count: 20
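The arithmetic behind the rc_delayed_event_mgmt numbers is simple enough to sketch in Python. This only restates the comment in the config (one heart-beat every 5 seconds); the helper names are hypothetical, and it says nothing about how Synapse implements its rate limiter internally.

```python
def required_rate(interval_seconds: float) -> float:
    """Events per second produced by one event every interval_seconds."""
    return 1.0 / interval_seconds


def headroom(per_second: float, interval_seconds: float) -> float:
    """How many times the configured per_second limit exceeds the required rate."""
    return per_second * interval_seconds


if __name__ == "__main__":
    # One heart-beat every 5 seconds against per_second: 1 from the config above.
    print(required_rate(5))  # prints 0.2
    print(headroom(1, 5))    # prints 5
```

So per_second: 1 leaves roughly five times the heart-beat rate as headroom for a single client.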

Configure the well-known file

The well-known file tells clients where to find your Element Call service. Create or update the .well-known/matrix/client file on your main domain’s web server and add the org.matrix.msc4143.rtc_foci key:

    {
      "m.homeserver": {
        "base_url": "https://matrix.yourdomain.com"
      },
      "org.matrix.msc4143.rtc_foci": [
        {
          "type": "livekit",
          "livekit_service_url": "https://matrixrtc.yourdomain.com"
        }
      ]
    }
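A typo in this file fails silently, so it can help to sanity-check it. Here is a minimal Python sketch that looks for the keys shown above in an already-parsed document. The function name well_known_problems is hypothetical, and the https check is my own assumption based on the example rather than a rule taken from the spec.

```python
import json


def well_known_problems(document: dict) -> list[str]:
    """Return a list of human-readable problems; an empty list means it looks fine."""
    problems = []

    homeserver = document.get("m.homeserver")
    if not isinstance(homeserver, dict) or not homeserver.get("base_url"):
        problems.append("m.homeserver.base_url is missing")

    foci = document.get("org.matrix.msc4143.rtc_foci")
    if not isinstance(foci, list) or not foci:
        problems.append("org.matrix.msc4143.rtc_foci is missing or empty")
        return problems

    livekit = [f for f in foci if isinstance(f, dict) and f.get("type") == "livekit"]
    if not livekit:
        problems.append("no rtc_foci entry with type 'livekit'")
    for focus in livekit:
        # Assumption: the service URL is served over https, as in the example.
        if not str(focus.get("livekit_service_url", "")).startswith("https://"):
            problems.append("livekit_service_url should be an https:// URL")
    return problems


if __name__ == "__main__":
    example = json.loads("""
    {
      "m.homeserver": {"base_url": "https://matrix.yourdomain.com"},
      "org.matrix.msc4143.rtc_foci": [
        {"type": "livekit", "livekit_service_url": "https://matrixrtc.yourdomain.com"}
      ]
    }
    """)
    print(well_known_problems(example))  # prints []
```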

Make sure this file is served with the correct MIME type (application/json) and appropriate CORS headers:

    <Location "/.well-known/matrix/client">
        Header set Access-Control-Allow-Origin "*"
        Header set Content-Type "application/json"
    </Location>
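To confirm the headers actually reach clients, you could fetch the file and inspect the response. The following Python sketch uses only the standard library. It checks for exactly the two values set in the Apache snippet above, which is an assumption on my part (other valid CORS setups exist), and the function names are hypothetical.

```python
import urllib.request


def response_headers(url: str, timeout: float = 10.0) -> dict[str, str]:
    """Fetch url and return its response headers with lower-cased names."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return {name.lower(): value for name, value in response.headers.items()}


def header_problems(headers: dict[str, str]) -> list[str]:
    """Compare headers against the values this guide sets; empty list means OK."""
    problems = []
    # Assumption: a wildcard origin, as in the Apache example.
    if headers.get("access-control-allow-origin") != "*":
        problems.append("Access-Control-Allow-Origin is not '*'")
    # startswith tolerates a suffix such as '; charset=utf-8'.
    if not headers.get("content-type", "").startswith("application/json"):
        problems.append("Content-Type is not application/json")
    return problems
```

For example, header_problems(response_headers("https://yourdomain.com/.well-known/matrix/client")) should return an empty list once everything is in place.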

🚀 Start it up

Restart your homeserver and reverse proxy to pick up the configuration changes, then start your services:

    docker-compose up -d

Troubleshooting

First, check whether you can now make calls:

- Open ElementX or Element Web on your phone or desktop

- Start a direct call with someone or create a call in a room (make sure you are the one who starts the call, otherwise you’ll be testing the other person’s setup)

- You should see the Element Call interface appear and connect successfully

If something isn’t working:

- Check the logs:

      docker logs element-call-jwt
      docker logs element-call-livekit

- In Element Web, click ‘View’ then ‘Toggle Developer Tools’. In the developer tools you can check the console logs and the network tab to see which requests fail when you try to join a call. Often the /sfu/get request fails because of an incorrect reverse proxy setup, usually one lacking the right CORS headers.

- Try testing with a community tool called testmatrix. You provide it with a username and session token, and it returns a link and a JWT token you can use to test the call connection.

And done.

Setting up your own Element Call backend isn’t too difficult once you understand the components involved. The most time-consuming part is getting all the configuration right, especially the reverse proxy and DNS settings.

If you run into issues or have suggestions for improving this setup, feel free to reach out below.